“Premature Optimization is the Root of All Evil”

How to efficiently load clips from a timeline in a DAW, even though you really don’t need to.

originally published 2024-09-06 on Recurse Center

zulip

I’d heard this Knuth quote but never really connected with it or dug into the source material (though Knuth Tome I has been gazing at me intently from my shelf for some time), but got a little taste of what he might’ve meant recently.

Problem

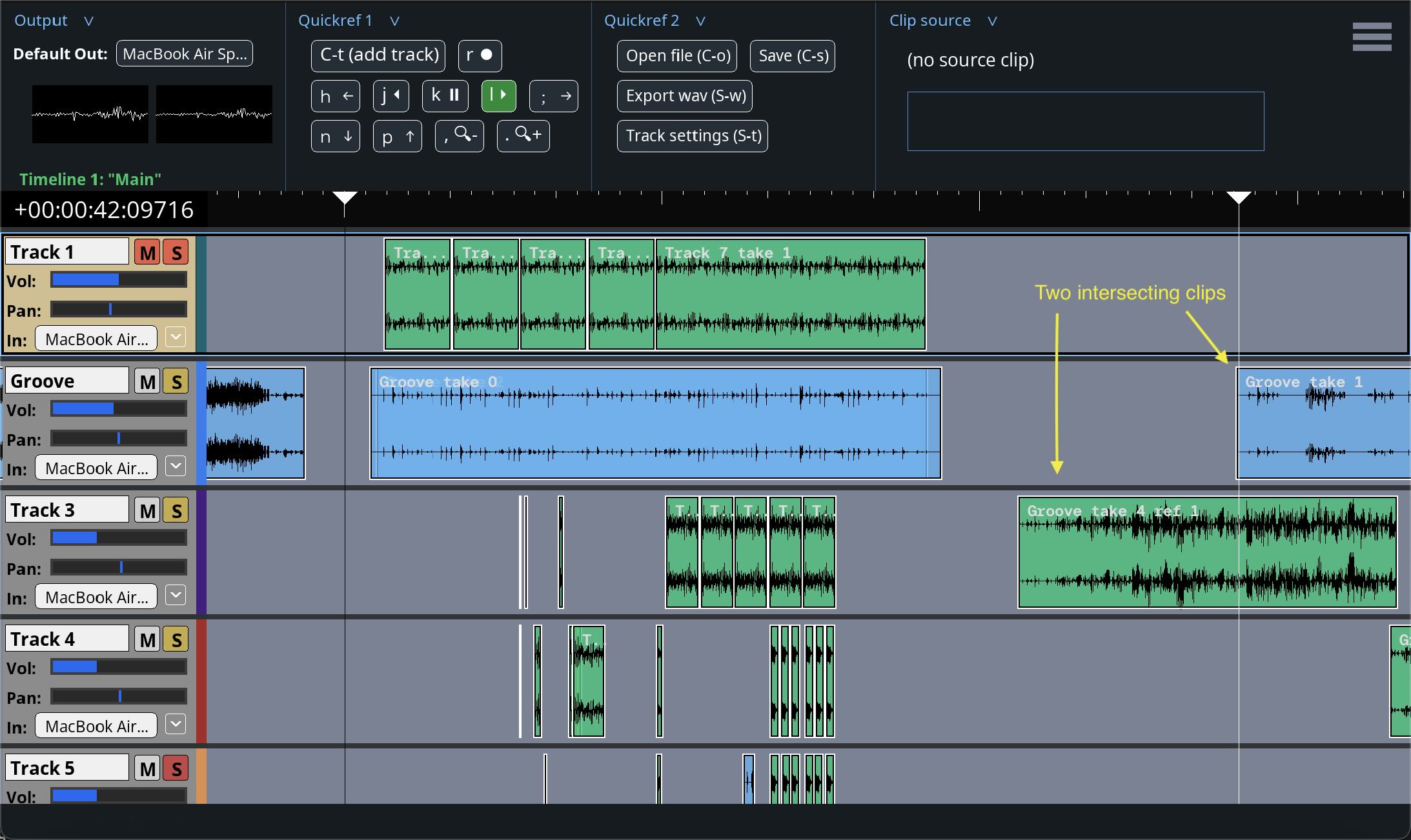

In Jackdaw, each Track contains an array of clips

(actually “clip references,” type ClipRef).

When the program assembles

a chunk (typically 2048 sample frames) of mixed-down audio for

playback, every single clip is checked for intersection with the

chunk.

That seems like a lot of work! The visual nature of the program also makes it feel superfluous. I can easily see which clips intersect the playhead, so why can’t the program just check those? If I have tons of clips on my project, won’t it take forever to search through them every time a new chunk is needed (many times a second)?

My aesthetic displeasure with the brute force solution – which of course has been working fine for me thus far – led me to spend some time thinking about it and planning a better solution. It’s a challenging problem because of the variable length of clips. A clip way back at time 0 might still need to be checked when we’re at the 30m mark, because that clip may be more than 30m long; so data structures based on a naive notion of clip locality are inadequate.

Solution

The solution involves maintaining two sorted arrays of clips on each track – one ordered by the clips’ starting positions, and one by their ending positions – as well as a cache of currently “active” clips.

When playback commences in the forward direction, all clips with starting positions up to the playhead position are checked to see if they are of sufficient duration to intersect with the playhead. If so, they are added to the “active” clip cache. During playback, each time a chunk is requested, the next clip in the sorted list is checked to see if it ought to be added to the active clip cache. We keep checking the next clip until one is not added. Then, only active clips are read for mixdown. When the end position of a clip is reached, it is dropped from the active clip cache. Any time the playhead position “jumps” (user clicks somewhere on the timeline or invokes a “jump to” command) the active clip cache must be reset; but this is only necessary when there is a jump.

The second array sorted in reverse order of clip end position is necessary to apply the same process for reverse playback.

I had filed this solution away a month or so ago, and decided it

would be a good time to implement it earlier this week. I spent way

more time than I expected getting binary search insertion right;

it’s something I’ve probably done before and expected to be quick

and easy, but perhaps due to haste, I took a wrong turn and got

really frustrated with myself. I used libc qsort for a

couple other scenarios (e.g. when moving a bunch of clips from one

track to another) and the name of Knuth stared out at me sternly

from the man page. Surveying several other parts of the program that

would have to change, I got an itch about how necessary all of this

really was.

How necessary all of this really was

I crudely timed a few different sections of the mixdown logic without the search optimization. Turns out that, even with ~500 clips in the project, the search time is dwarfed by a single FFT-based effect.

In retrospect, this is not surprising at all; 500 is a huge number of clips to have in a DAW project (I think – DAW users weigh in please), but is not a huge number of computer operations to run every time a mixdown chunk is needed (every ~0.04 seconds). The problem seemed worse than it was because clips look “heavy”; I overestimated the cost of the brute force search because I subconsciously and incorrectly implicated the scale of clip data (i.e., the actual audio data associated with the clip) in the search process, while the only actually relevant parameters are a couple integers (start and end position).

None of this was “evil.” It was an interesting problem, and there may come a time when it’s worthwhile to implement this optimization. But I have no shortage of interesting problems to work at, so perhaps it wasn’t the best use of my time.